Note

Test Round Released!

Sample Dataset and Evaluation Tools will be released on 5th August.

Schedule

| Event | Date |
| --- | --- |
| Release Initial Dataset & Eval Tools | 01 Aug 2023 |
| Release Final Competition Set | 15 Sep 2023 |
| Submission Close | 24 Sep 2023 |
| Winners Notified | 25 Sep 2023 |
| Winners Presentations | 01 Oct 2023 |

Competition Background

For decades, place recognition has been applied to a variety of localization and navigation tasks. However, only a few methods have been proposed for large-scale map assembling. With the advancement of autonomous driving, last-mile delivery, and multi-agent cooperation, there is a significant demand for efficient and accurate large-scale, crowd-sourced map updating. In this competition, General Place Recognition (GPR) for Autonomous Map Assembling, we provide a comprehensive evaluation platform for large-scale LiDAR/IMU datasets. These datasets have been collected repeatedly at different times in a variety of environments (city, park), with varying overlaps. The goal is to evaluate the data association ability between trajectories that exhibit overlapping regions, without any GPS assistance.

Dataset Overview

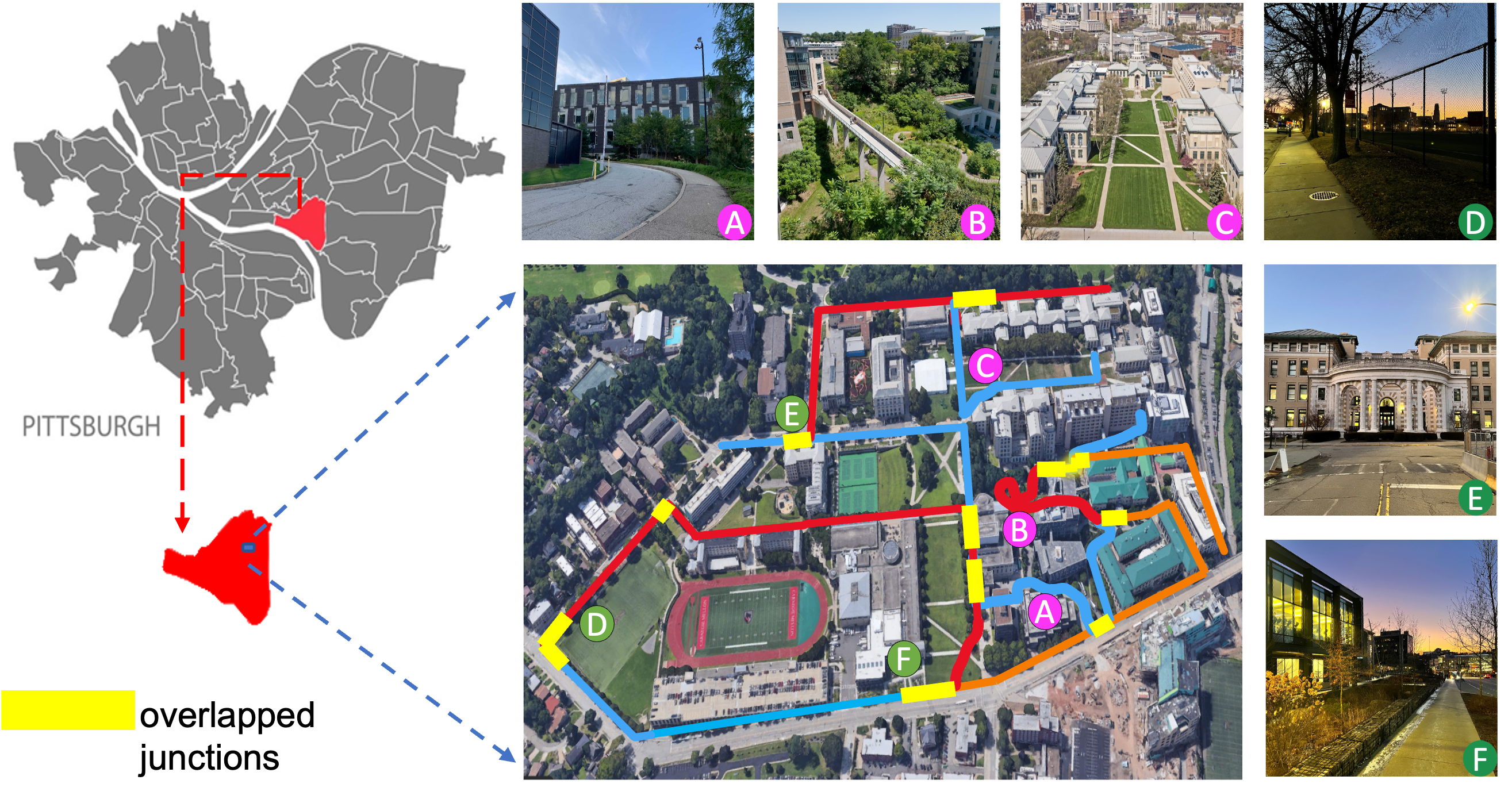

- 10 trajectories comprising 80 real-world sequences collected on the campus of Carnegie Mellon University.

- Each trajectory was traversed 8 times: 2 forward and 2 backward sequences in daylight, and 2 forward and 2 backward sequences at night.

Participate in the Competition

Performance is evaluated on AIcrowd. Please sign up or log in to register for the competition.

[ICRA2022 & IROS2023] General Place Recognition: City-scale UGV Localization

About the Test Set

The test dataset contains data selected from 10 trajectories of the Alita dataset, including both same-direction and reverse-direction revisits.

Download Link

Please download the test set from Dropbox.

Data Structure

PCD

├── 1-500.zip

├── 501-1000.zip

├── 1001-1500.zip

├── ...

└── 6001-6306.zip

The test set includes 6036 submaps. Each submap consists of points queried within 50m.

Submission and Evaluation

The objective of this competition is to assess the effectiveness of place recognition methods in scenarios involving overlapping trajectory areas. We will evaluate participants’ submissions using both Top1 and Top5 recall metrics, and the final competition rankings will be determined based on the Top1 recall score.
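As a rough illustration of the Top1 and Top5 recall metrics, the sketch below ranks database descriptors by Euclidean distance to each query descriptor and counts a query as a hit if any of its top-k candidates is a true match. The function name `recall_at_k`, the `positives` argument, and the choice of Euclidean distance are assumptions made for this example; the organizers' exact evaluation procedure is not described here and may differ.

```python
import numpy as np

def recall_at_k(query_desc, db_desc, positives, ks=(1, 5)):
    """Fraction of queries whose top-k retrieved database entries contain a true match.

    query_desc: (Q, D) array, db_desc: (N, D) array.
    positives[i]: set of database indices treated as correct for query i
    (how true matches are defined is an assumption of this sketch).
    """
    # Pairwise squared Euclidean distances, shape (Q, N).
    d2 = (
        np.sum(query_desc ** 2, axis=1, keepdims=True)
        - 2.0 * query_desc @ db_desc.T
        + np.sum(db_desc ** 2, axis=1)
    )
    ranked = np.argsort(d2, axis=1)  # closest database entry first

    # Queries without any true match cannot be recalled, so skip them.
    evaluated = [i for i, p in enumerate(positives) if p]
    recalls = {}
    for k in ks:
        hits = sum(
            1 for i in evaluated
            if set(ranked[i, :k].tolist()) & set(positives[i])
        )
        recalls[k] = hits / len(evaluated) if evaluated else 0.0
    return recalls
```

Calling `recall_at_k(q, db, positives)` returns a dictionary such as `{1: ..., 5: ...}`, keyed by k.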

Please unzip all provided files and convert each point cloud into a global descriptor, preserving the original order. For instance, the global descriptor for “1.pcd” should be at index 0, the one for “2.pcd” at index 1, and so forth. Submissions should be in NumPy's standard binary file format (.npy).
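A minimal sketch of producing such a file is shown below. It assumes all unzipped `.pcd` files sit in one directory with purely numeric names, and that the submission is a single array of shape (number of submaps, descriptor length); neither detail is confirmed above. The `describe` callable is a hypothetical stand-in for your own model. Files are sorted numerically because a plain string sort would place “10.pcd” before “2.pcd”.

```python
from pathlib import Path

import numpy as np

def build_submission(pcd_dir, describe, out_path):
    """Stack one descriptor per submap, in numeric file order, and save as .npy.

    describe: hypothetical callable mapping a .pcd path to a 1-D NumPy array.
    """
    # Numeric sort so that row 0 is 1.pcd, row 1 is 2.pcd, and so on.
    files = sorted(Path(pcd_dir).glob("*.pcd"), key=lambda p: int(p.stem))
    descriptors = np.stack([describe(f) for f in files])
    np.save(out_path, descriptors)
    return descriptors.shape
```

Loading the result back with `np.load` and checking that its first dimension equals the number of submaps is a cheap sanity check before uploading.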

Sample Dataset

The sample dataset contains 4 trajectories.

- For each selected trajectory, two forward sequences and one backward sequence will be provided.

- For each sequence within the same trajectory, the intra-sequence poses and inter-sequence poses are provided.

Data Structure

Sample ----------------------------> sample tracks for self evaluation

├── train_1

│ ├── DATABASE

│ │ ├── clouds

│ │ │ ├── time_stamp.pcd ----> submap

│ │ │ ├── ...

│ │ ├── poses_inter.csv -------> odometry in DATABASE coordinate

│ │ └── poses_intra.csv -------> odometry

│ └── QUERY

│ ├── forward

│ │ ├── clouds

│ │ │ ├── time_stamp.pcd

│ │ │ ├── ...

│ │ ├── poses_inter.csv -> odometry in DATABASE coordinate

│ │ └── poses_intra.csv -> odometry

│ └── backward

├── train_2

├── train_3

└── train_4

- For each sequence:

- clouds: This folder contains submaps sliced from the global map generated by SLAM. Each submap includes points queried within 50 m.

- poses_intra.csv: Odometry information generated by SLAM. The mean distance between adjacent poses is around 1 m.

- poses_inter.csv: Odometry information generated by Interactive-SLAM. The mean distance between adjacent poses is around 1 m. All poses are expressed in the DATABASE coordinate frame.

- In the provided *.csv files, the timestamp value in the first column of each row serves as a unique identifier. This identifier links the row’s data to a corresponding PCD file in the ‘clouds’ directory, which has the same name as the timestamp value. For instance, if a row contains odometry information with a timestamp value of ‘123456789’, a corresponding PCD file named ‘123456789.pcd’ should be present in the ‘clouds’ directory.
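The timestamp-to-file linkage described above can be sketched as follows. The example relies only on the first column being the timestamp; it assumes the files are comma-delimited and leaves the remaining columns uninterpreted, since their layout is not specified here. The function name `link_poses_to_clouds` is made up for illustration. If the CSV has a header row, its first cell will simply show up in the `missing` list.

```python
import csv
from pathlib import Path

def link_poses_to_clouds(csv_path, clouds_dir):
    """Pair each CSV row with the PCD file named after its first-column timestamp.

    Returns (linked, missing): linked holds (timestamp, pcd_path, other_columns)
    tuples, missing holds timestamps with no matching file in clouds_dir.
    """
    clouds_dir = Path(clouds_dir)
    linked, missing = [], []
    with open(csv_path, newline="") as f:
        for row in csv.reader(f):
            if not row:
                continue  # skip blank lines
            stamp = row[0].strip()
            pcd = clouds_dir / f"{stamp}.pcd"
            if pcd.is_file():
                linked.append((stamp, pcd, row[1:]))
            else:
                missing.append(stamp)
    return linked, missing
```

A non-empty `missing` list is a quick hint that a sequence was only partially extracted.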

Download Link

Network Training

Participants are free to use any dataset to train their networks and fine-tune their networks with the sample dataset provided.

Network Self-evaluation

Place recognition performance can be evaluated on both forward and backward revisits. Example evaluation code is provided to calculate the recall in both cases.

Before running the code,

- please modify the VAL_DATA_PATH and VAL_TRAJ in evaluate.py.

- please substitute the default model in evaluate.py.

pip3 install -r requirements.txt

python3 evaluate.py
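Independently of the provided evaluate.py, whose internals are not described here, a self-evaluation needs some notion of which DATABASE submaps count as true matches for a QUERY submap. One common approach, sketched below, treats every database pose within a fixed radius of the query pose as a match, which is possible because poses_inter.csv expresses all poses in the DATABASE coordinate frame. The function name, the radius value, and the assumption that positions have already been parsed into (x, y, z) arrays are all illustrative choices, not the organizers' criterion.

```python
import numpy as np

def positives_within_radius(query_xyz, db_xyz, radius_m):
    """For each query position, return the set of database indices within radius_m.

    query_xyz: (Q, 3) array, db_xyz: (N, 3) array, both in the same coordinate frame.
    radius_m: match radius in metres (a hypothetical threshold).
    """
    # Brute-force pairwise distances, shape (Q, N); fine for a few thousand poses.
    diff = query_xyz[:, None, :] - db_xyz[None, :, :]
    dist = np.linalg.norm(diff, axis=2)
    return [set(np.flatnonzero(row <= radius_m).tolist()) for row in dist]
```

For much larger trajectories, a spatial index such as a k-d tree would avoid building the full distance matrix.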

Contact

Please email ryanzhao9459@gmail.com with any questions about the competition.