Introduction

We believe that an ideal place recognition dataset for long-term autonomy should fulfill the following criteria:

- Evaluation on realistic and dynamic environments rather than simplified scenarios or simulations.

- Coverage of small-scale, large-scale, and overlapping tracks.

- Inclusion of diverse environmental conditions and sensor setups.

- Facilitation of benchmarking for various recognition tasks.

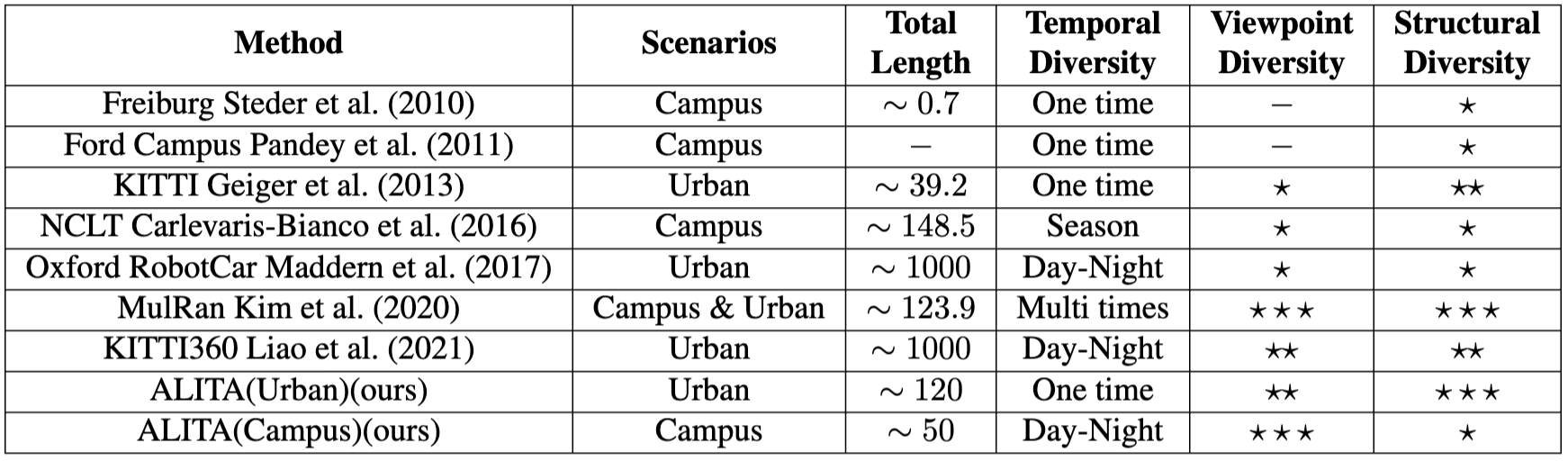

We introduce a long-term place recognition dataset designed for mobile localization in large-scale dynamic environments. The dataset features a campus-scale track with recordings from a LiDAR device and an omnidirectional camera across 10 trajectories, each recorded 8 times under varying illumination conditions, and a city-scale track recorded solely with a LiDAR device over a 120 km trajectory covering diverse urban areas. It includes 200 hours of raw data with ground truth positions refined through General ICP-based point cloud methods. This dataset aims to identify methods with high recognition accuracy and robustness, supporting long-term autonomy in robotic systems.

Dataset Description

The ALITA dataset is composed of two datasets:

- Urban dataset: LiDAR recordings of a city-scale urban area, covering 50 segments and 120 km of trajectory in total.

- Campus dataset: recorded in a campus-scale environment, with omnidirectional camera and LiDAR inputs gathered on 10 different trajectories, each repeated 8 times under different illumination and viewpoints. This dataset targets the long-term localization challenge.
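The 10 trajectories and 8 repeated recordings of the Campus dataset can be enumerated with a short Python sketch. The session names (`day_forward_1`, ..., `night_back_2`) and the `Traj_XX` folders follow the directory listing in the Data Format section; the function names and the `dataset_root` argument are hypothetical and only illustrate how the layout might be traversed locally.

```python
from itertools import product
from pathlib import Path

# Naming components taken from the directory listing in the Data Format section.
TIMES = ("day", "night")          # illumination condition
DIRECTIONS = ("forward", "back")  # travel direction (viewpoint)
REPEATS = (1, 2)                  # each combination is recorded twice
NUM_CAMPUS_TRAJECTORIES = 10


def campus_sessions():
    """Return the 8 session names recorded for each Campus trajectory."""
    return [f"{t}_{d}_{r}" for t, d, r in product(TIMES, DIRECTIONS, REPEATS)]


def campus_session_dirs(dataset_root):
    """List the processed-data directory expected for every Campus session.

    `dataset_root` is a hypothetical local path to the extracted `Dataset/` folder.
    """
    root = Path(dataset_root) / "Campus"
    return [
        root / f"Traj_{i:02d}" / session
        for i in range(1, NUM_CAMPUS_TRAJECTORIES + 1)
        for session in campus_sessions()
    ]
```

Under these assumptions, `campus_session_dirs("Dataset")` yields 10 × 8 = 80 session directories, from `Dataset/Campus/Traj_01/day_forward_1` to `Dataset/Campus/Traj_10/night_back_2`.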

Compared to existing datasets:

- Our Urban dataset covers varied 3D scenarios for comprehensive evaluation of 3D place recognition and multi-session SLAM.

- Our Campus dataset repeatedly covers diverse campus areas with dynamic objects and differences in illumination and viewpoint, making it suitable for evaluating long-term re-localization or incremental learning ability.

Data Format

- Raw Data

      .
      └── Rosbag/
          ├── Urban/
          │   ├── sensor_01.bag            // rosbag with two topics: /imu/data, /velodyne_packets
          │   ├── ...                      // representing IMU and LiDAR respectively.
          │   └── sensor_50.bag
          └── Campus/
              ├── Traj_01/
              │   ├── day_forward_1.bag    // rosbag with three topics: /imu/data, /velodyne_points and /camera/image
              │   ├── day_forward_2.bag    // representing IMU, LiDAR and camera respectively.
              │   ├── day_back_1.bag
              │   ├── day_back_2.bag
              │   ├── night_forward_1.bag
              │   ├── night_forward_2.bag
              │   ├── night_back_1.bag
              │   └── night_back_2.bag
              ├── ...
              └── Traj_10

- Processed Data

      .
      └── Dataset/
          ├── Urban/
          │   ├── Traj_01/
          │   │   ├── CloudGlobal.pcd          // Global map
          │   │   ├── poses.csv                // Key poses generated by SLAM
          │   │   ├── correspondences.csv      // Correspondences between the poses in two trajectories with overlaps
          │   │   ├── Clouds/                  // Submaps generated by querying points within 50 meters
          │   │   │   ├── <timestamp>.pcd      // of each pose from the global map.
          │   │   │   └── ...
          │   │   └── gps.txt                  // Recorded GPS data
          │   ├── ...
          │   └── Traj_50
          └── Campus/
              ├── Traj_01/
              │   ├── day_forward_1/
              │   │   ├── CloudGlobal.pcd
              │   │   ├── poses_intra.csv      // Poses under the global coordinate of day_forward_1 within the same trajectory
              │   │   ├── poses_inter.csv      // Key poses generated by SLAM
              │   │   ├── Clouds/
              │   │   │   ├── <timestamp>.pcd
              │   │   │   └── ...
              │   │   └── Panoramas/           // Omnidirectional pictures with a resolution of 1024 × 512
              │   │       ├── <timestamp>.png
              │   │       └── ...
              │   ├── day_forward_2
              │   ├── day_back_1
              │   ├── day_back_2
              │   ├── night_forward_1
              │   ├── night_forward_2
              │   ├── night_back_1
              │   └── night_back_2
              ├── ...
              └── Traj_10
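The `Clouds/` submaps are described as the points of the global map within 50 meters of each key pose. A minimal Python sketch of that kind of radius query is given below. It is not the authors' tooling: the function name `crop_submap` is hypothetical, points are plain `(x, y, z)` tuples, and the use of full 3D Euclidean distance is an assumption (a horizontal radius is equally plausible). Whether the stored submaps are also shifted into the pose frame is not stated, so the sketch leaves points in the map frame.

```python
import math


def crop_submap(global_points, pose_xyz, radius=50.0):
    """Return the points of the global map within `radius` meters of a pose.

    global_points: iterable of (x, y, z) tuples in the global map frame.
    pose_xyz:      (x, y, z) position of the key pose in the same frame.
    Assumption: distance is 3D Euclidean; points stay in the map frame.
    """
    center = tuple(pose_xyz)
    return [
        tuple(point)
        for point in global_points
        if math.dist(point, center) <= radius
    ]
```

For real point clouds with millions of points, a vectorized or spatially indexed query would likely be preferable to this plain loop.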

Dataset Release

- Raw Data - Download Link

- Processed Data (human-parseable data) - Download Link

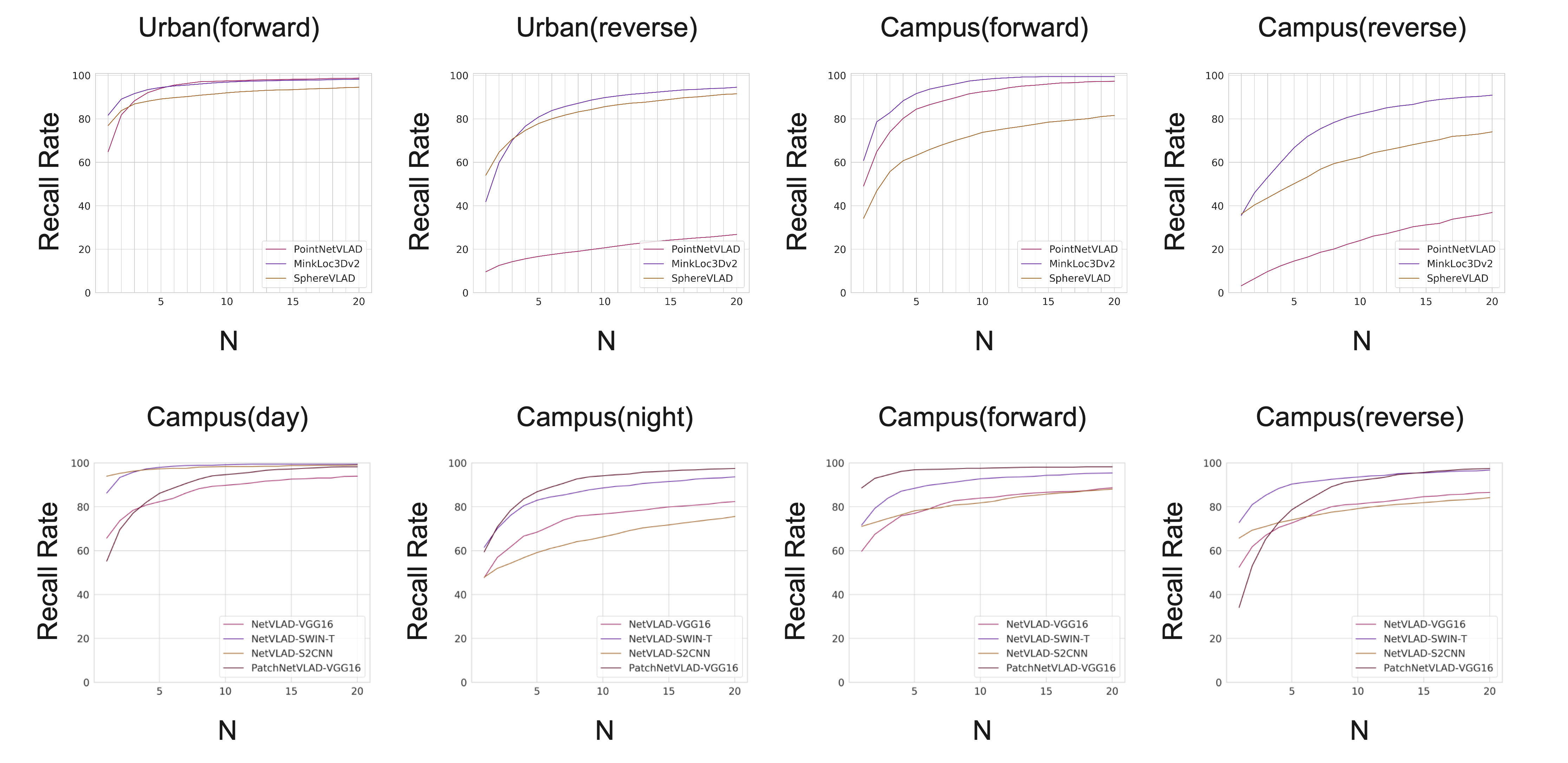

Benchmark Experiments

Publications

BibTeX:

@article{yin2022alita,
  title={ALITA: A Large-scale Incremental Dataset for Long-term Autonomy},
  author={Yin, Peng and Zhao, Shiqi and Ge, Ruohai and Cisneros, Ivan and Fu, Ruijie and Zhang, Ji and Choset, Howie and Scherer, Sebastian},
  journal={arXiv preprint arXiv:2205.10737},
  year={2022},
  url={https://github.com/MetaSLAM/ALITA}
}

Contact

- Peng Yin: (hitmaxtom [at] gmail [dot] com)